Performance characteristics of the DelftBlue 'compute' nodes

The majority of applications in CS&E and data science are limited either by the rate at which floating point operations can be executed (compute bound) or by the memory bandwidth (memory bound). We therefore focus here on the floating point units and the memory subsystem of the CPUs.

Phase 2 nodes (Intel Xeon 6448Y "Sapphire Rapids")

Below we describe the "Phase 1" hardware in detail. The newer compute nodes are equipped with 64 (instead of 48) cores, run at frequencies between 2.1 and 4.1 GHz, and offer a higher peak performance (5.7 TFlop/s) and memory bandwidth (400 GB/s). Otherwise, the setup is very similar, except that this generation of processors supports AMX instructions, which boost compute-intensive half-precision applications (typically machine learning) to up to 45 TFlop/s. Benchmark results can be found in this report.

Floating point performance

- Each compute node in DelftBlue (labelled cmp[XXX]) has two CPU sockets, each holding a 24-core Intel Xeon 6248R ('Cascade Lake') processor.

- The base CPU frequency is 3.0 GHz. For single-core applications, this can be increased up to 4.0 GHz (turbo mode).

- Each CPU core has 2 floating point units, each capable of 4 (AVX2) or 8 (AVX512) double precision fused multiply-add (FMA) operations per cycle. Since an FMA counts as two floating point operations, a core can perform up to 16 (AVX2) or 32 (AVX512) Flops per cycle.

- AVX512 operations run at a lower CPU frequency, determined experimentally to be 2.5 GHz.

- In total, this gives a peak performance per node of \(32 \times 48 \times 2.5 = 3840\) GFlop/s for AVX512 and \(16 \times 48 \times 3.0 = 2304\) GFlop/s for AVX2. Note that this assumes a 1:1 ratio of additions and multiplications (so that every operation can be part of an FMA) and a sufficiently long loop of calculations to keep the pipeline filled.
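The peak-performance arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the function name `peak_gflops` is hypothetical, and the inputs are the figures quoted above (2 FMA units per core, 8 or 4 doubles per vector, 2 Flops per FMA, 2.5 GHz with AVX512 and 3.0 GHz with AVX2).

```python
def peak_gflops(cores: int, fma_units: int, doubles_per_vector: int, ghz: float) -> float:
    """Theoretical peak in GFlop/s; each FMA counts as 2 Flops (one add, one multiply)."""
    flops_per_cycle_per_core = fma_units * doubles_per_vector * 2
    return cores * flops_per_cycle_per_core * ghz


# 48 cores per node (2 sockets x 24 cores)
avx512 = peak_gflops(cores=48, fma_units=2, doubles_per_vector=8, ghz=2.5)
avx2 = peak_gflops(cores=48, fma_units=2, doubles_per_vector=4, ghz=3.0)
print(f"AVX512: {avx512:.0f} GFlop/s, AVX2: {avx2:.0f} GFlop/s")
# prints "AVX512: 3840 GFlop/s, AVX2: 2304 GFlop/s"
```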

Memory bandwidth

- Each socket has two NUMA domains, which means that threads may encounter different memory access speeds depending on where the data is located. When data in a NUMA domain is frequently accessed by a thread outside that domain, however, the operating system may migrate the memory pages automatically, which in many cases mitigates the problem.

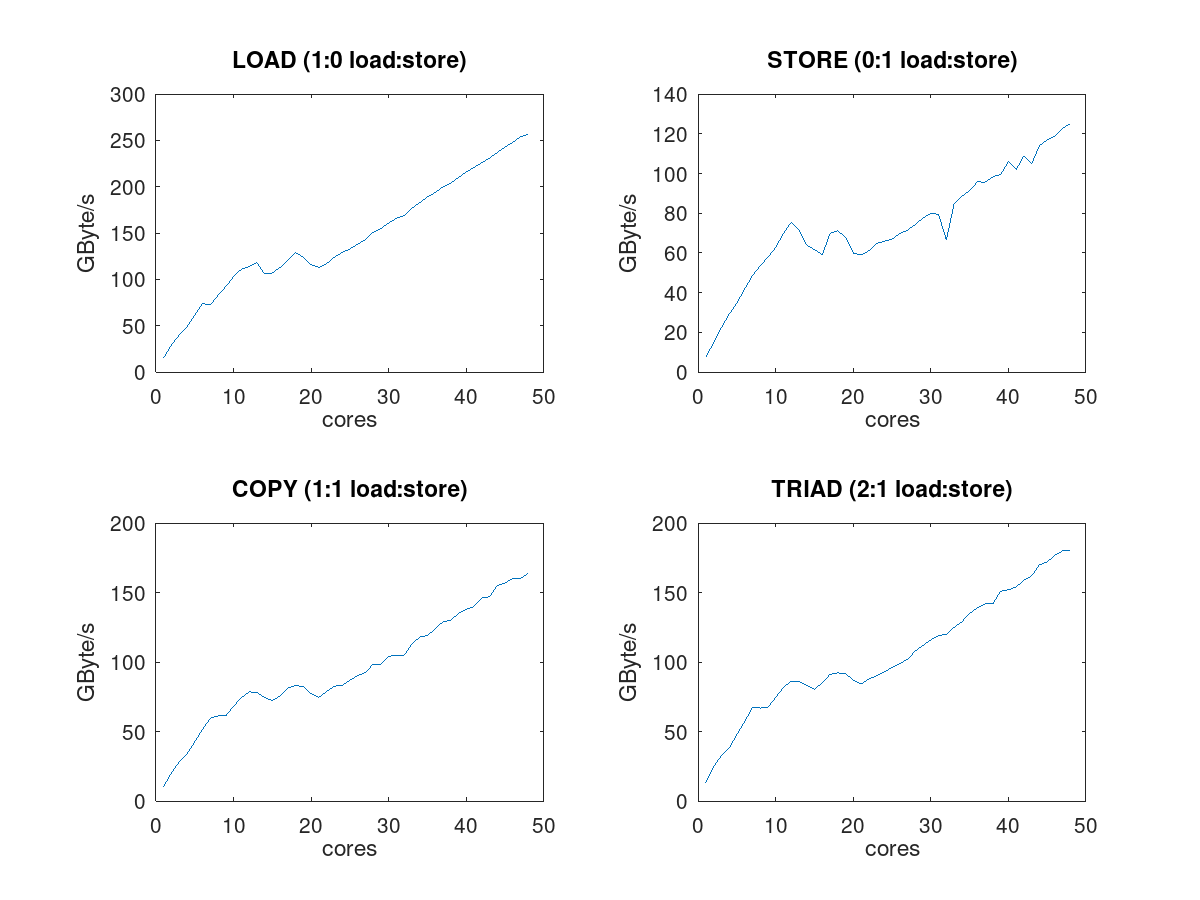

- The bandwidths achieved for different types of access patterns are shown in the figure below. The 'load' and 'store' benchmarks show that the memory bandwidth for reading is significantly higher than for writing data back to RAM. Symmetric load/store (copy benchmark) and a 2:1 load:store ratio (triad benchmark) deliver performance between these two extremes.

For the complete node (48 cores), using a data set size of 1 GB and AVX512 operations, we measured the following bandwidths in GB/s, depending on the load:store ratio:

| LOAD (1:0) | STORE (0:1) | COPY (1:1) | TRIAD (2:1) |
|---|---|---|---|
| 256 | 125 | 165 | 184 |
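A crude way to relate the four columns is to assume, purely for illustration and not as something the benchmarks establish, that the load stream and the store stream are serviced one after the other at their separately measured rates. The bandwidth for a mix is then the load and store bandwidths combined harmonically, weighted by the load:store ratio. The sketch below (the name `mixed_bandwidth` is hypothetical) gives about 168 GB/s for COPY and 190 GB/s for TRIAD, a few percent above the measured 165 and 184 GB/s, so treat it only as a rough guide.

```python
def mixed_bandwidth(loads: int, stores: int,
                    load_bw: float = 256.0, store_bw: float = 125.0) -> float:
    """Estimate the bandwidth (GB/s) for a loads:stores mix.

    Simplifying assumption: the time to move the data is the time for the
    load stream at load_bw plus the time for the store stream at store_bw.
    """
    total = loads + stores
    time = loads / load_bw + stores / store_bw
    return total / time


print(round(mixed_bandwidth(1, 1)))  # COPY  -> 168
print(round(mixed_bandwidth(2, 1)))  # TRIAD -> 190
```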

Detailed measurements with a varying number of threads are shown below. The irregular behavior shows how important it is to map the threads carefully to the different NUMA domains: if your application is memory bandwidth bound, a good mapping lets you reach a high memory bandwidth with as few cores as possible.

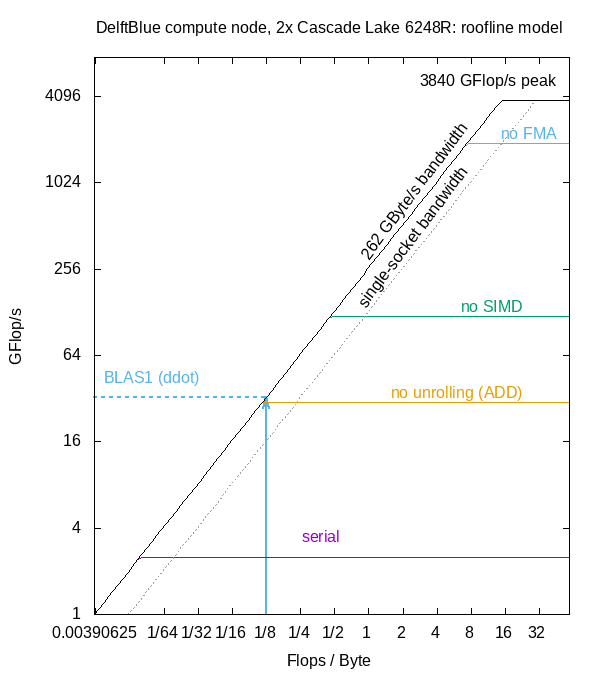
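One way to spread threads evenly over the NUMA domains is to list OpenMP places in round-robin order over the domains and bind one thread to each place. The sketch below builds such an `OMP_PLACES` string. It assumes 4 NUMA domains of 12 cores each, with cores numbered consecutively within a domain; this numbering is an assumption that you should check on the node (e.g. with `numactl --hardware` or `lscpu`) before relying on it. The function name `spread_places` is hypothetical.

```python
def spread_places(n_threads: int, n_domains: int = 4, cores_per_domain: int = 12) -> str:
    """Return an OMP_PLACES string that puts consecutive threads in different NUMA domains.

    Assumes (hypothetically) that domain d owns cores
    d*cores_per_domain .. (d+1)*cores_per_domain - 1.
    """
    if not 0 < n_threads <= n_domains * cores_per_domain:
        raise ValueError("n_threads must be between 1 and the number of cores")
    cores = []
    for t in range(n_threads):
        domain = t % n_domains   # cycle through the NUMA domains
        slot = t // n_domains    # next unused core within that domain
        cores.append(domain * cores_per_domain + slot)
    # One place per core, e.g. "{0},{12},{24}"
    return ",".join(f"{{{c}}}" for c in cores)


print(spread_places(6))  # {0},{12},{24},{36},{1},{13}
```

For example, with `OMP_NUM_THREADS=6`, setting `OMP_PLACES` to the returned string and `OMP_PROC_BIND=close` should put the six threads on cores 0, 12, 24, 36, 1 and 13, i.e. one or two per NUMA domain (again under the core-numbering assumption above).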

Roofline model

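When investigating the performance of your application, you can consult the roofline model plotted below to determine the applicable peak performance. According to the roofline model [Williams et al. (2009)], performance is limited either by the memory bandwidth (to the left of the kink) or by the peak floating point performance (to the right of it), depending on the computational intensity \(I_c = \frac{\text{Flops}}{\text{Byte}}\) of the application, i.e. the number of floating point operations performed per byte transferred to or from memory. In other words, the attainable performance is \(P = \min(P_{\text{peak}}, I_c \cdot B)\), where \(B\) is the memory bandwidth. To determine \(I_c\) for your application,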

- identify the region(s) where most time is spent, e.g. using a profiling tool like gprof,

- either count the floating point operations and the bytes transferred to and from memory in that region, or measure them using e.g. likwid,

- compute \(I_c\) and read off the applicable peak performance from the plot.
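As an illustration of these steps, consider a STREAM-like triad `a[i] = b[i] + s * c[i]` on doubles: each iteration performs 2 Flops and moves 24 bytes (two 8-byte loads and one 8-byte store, ignoring any write-allocate traffic), so \(I_c = 2/24 \approx 0.083\) Flop/Byte. The sketch below evaluates \(P = \min(P_{\text{peak}}, I_c \cdot B)\), assuming the node-level AVX512 peak of 3840 GFlop/s and a bandwidth of \(2 \times 132\) GB/s (the ideal per-socket load bandwidth used for the plot). The function name `attainable_gflops` is hypothetical.

```python
def attainable_gflops(intensity: float, peak_gflops: float, bandwidth_gbs: float) -> float:
    """Roofline estimate: the lower of the compute peak and bandwidth * intensity."""
    return min(peak_gflops, intensity * bandwidth_gbs)


PEAK = 3840.0   # GFlop/s, AVX512 peak of a full node
BW = 2 * 132.0  # GB/s, ideal load bandwidth of two sockets

triad_intensity = 2 / 24  # Flop/Byte: 2 Flops per 24 bytes moved
ridge = PEAK / BW         # intensity at the kink of the roofline

print(f"triad: {attainable_gflops(triad_intensity, PEAK, BW):.1f} GFlop/s")  # 22.0
print(f"kink at I_c = {ridge:.1f} Flop/Byte")                                 # 14.5
```

Because 0.083 Flop/Byte lies far to the left of the kink, the triad is memory bound: under these assumptions it can reach at most about 22 GFlop/s, well under 1% of the AVX512 peak.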

In the plot, the vertical lines indicate the applicable peak floating point performance for the cases where

- only a single core is used (serial),

- the pipeline is not filled because the loop is not unrolled, so each addition has to wait for the previous one (for example, when summing the elements of a large array),

- no SIMD vectorization (AVX2, AVX512) is possible,

- no fused multiply-add (FMA) operations can be exploited.
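The "pipeline not filled" case can be illustrated with a sum reduction. With a single accumulator, every addition depends on the result of the previous one; keeping several independent partial sums (a form of unrolling) allows consecutive additions to overlap in the pipeline. The Python sketch below shows only the loop structure you would use in a compiled kernel; Python itself will not run faster this way. The choice of four accumulators is arbitrary, and the function names are hypothetical. Compilers typically apply this transformation to floating point sums only when allowed to reassociate additions, since it changes the rounding slightly.

```python
import math


def sum_single(xs: list[float]) -> float:
    """One accumulator: each addition depends on the previous result."""
    acc = 0.0
    for x in xs:
        acc += x
    return acc


def sum_unrolled(xs: list[float], n_acc: int = 4) -> float:
    """n_acc independent accumulators, combined at the end.

    Additions into different accumulators do not depend on each other,
    so in compiled code several of them can be in flight at once.
    """
    accs = [0.0] * n_acc
    n_main = len(xs) - len(xs) % n_acc
    for i in range(0, n_main, n_acc):
        for k in range(n_acc):
            accs[k] += xs[i + k]
    for x in xs[n_main:]:  # leftover elements
        accs[0] += x
    return sum(accs)


data = [0.1 * i for i in range(1001)]
# Same result up to rounding, since the summation order differs
print(math.isclose(sum_single(data), sum_unrolled(data)))  # True
```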

The memory bandwidth used to generate this diagram is 132 GB/s per socket, which is the ideal (vendor-specified) load bandwidth. Depending on the load:store ratio in the kernel and on the size of the data set (e.g. whether it fits into the 36 MB L3 cache), a different bandwidth applies; see the measurements above.

Some MPI benchmarks

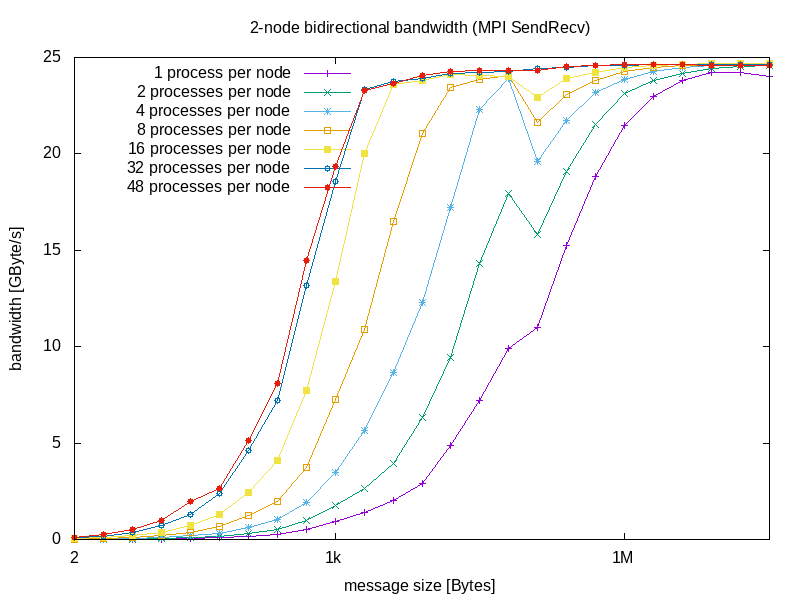

Each compute node of DelftBlue has a 100 Gbit/s InfiniBand card, i.e. 12.5 GB/s in each direction, which amounts to a maximum bidirectional bandwidth of 25 GB/s when two nodes send and receive simultaneously.

The following plot shows the bandwidth achieved by an MPI_Sendrecv operation between two nodes, depending on the message size. This benchmark was carried out on an empty machine: in operational mode the bandwidth may depend on the location of the nodes in the network topology and the overall load on the network.

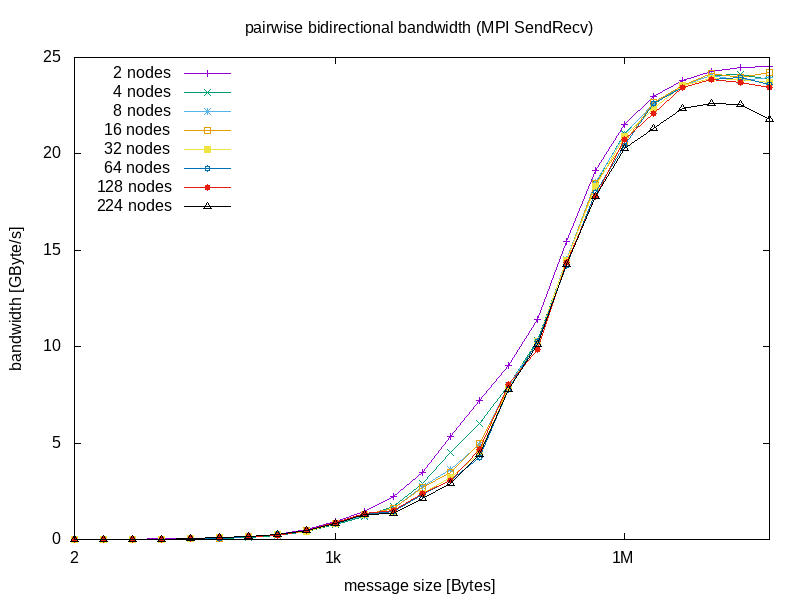

On the full machine (224 nodes) with one MPI process per node and simultaneous pairwise MPI_Sendrecvs, the bidirectional bandwidth (depending on the message size) is shown here:

From these measurements, the following network parameters can be determined:

- The latency for sending a small message is approximately 1.2 microseconds.

- The maximum bandwidth between two nodes is 12.5 GB/s (one direction) or 25 GB/s (full duplex).

- The maximum bidirectional cross-section bandwidth for all Phase 1 nodes is about 5 TB/s (that is, the maximum bandwidth achievable if one half of the machine communicates simultaneously with the other half).
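The latency and bandwidth above can be combined in the simple latency-bandwidth model, often called the Hockney model, \(T(n) = \alpha + n/\beta\), to estimate the transfer time and effective bandwidth for a message of \(n\) bytes. The sketch below assumes \(\alpha = 1.2\,\mu\text{s}\) and \(\beta = 12.5\) GB/s per direction, taken from the list above; real transfers also depend on the MPI protocol in use and on the network load, which the model ignores. The function names are hypothetical.

```python
ALPHA = 1.2e-6  # s, small-message latency (measured value above)
BETA = 12.5e9   # bytes/s, maximum bandwidth in one direction


def transfer_time(n_bytes: float) -> float:
    """Latency-bandwidth model: fixed latency plus message size over bandwidth."""
    return ALPHA + n_bytes / BETA


def effective_bandwidth(n_bytes: float) -> float:
    """Bandwidth seen by the application for a single message of n_bytes."""
    return n_bytes / transfer_time(n_bytes)


# Message size at which half of the peak bandwidth is reached: n = alpha * beta
n_half = ALPHA * BETA
print(f"n_1/2 = {n_half / 1e3:.0f} kB")  # 15 kB
for n in (1e3, 1e5, 1e7):
    print(f"{n:>10.0f} B: {effective_bandwidth(n) / 1e9:.2f} GB/s")
```

In this model, messages much smaller than about 15 kB are dominated by latency, which is why aggregating small messages usually pays off.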


[Williams et al. (2009)] S. Williams et al. Roofline: An insightful visual performance model for multicore architectures. Commun. ACM, 52(4):65–76, April 2009. doi: 10.1145/1498765.1498785.