Abaqus

Abaqus can run in both multithreading (shared-memory) and multiprocessing (MPI) modes. Example submission scripts for both modes are given below.

Multiprocessing job (MPI)

1. Load module

Load the Abaqus module with "module load abaqus" (as in the script below). This makes the Abaqus commands, such as abq2022, available in your shell.

2. Submit Abaqus job

If you want to run Abaqus with multiprocessing on a single node, you can submit the following script:

#!/bin/bash

#

#SBATCH --job-name="SC8R_S1_DMB_R"

#SBATCH --time=108:00:00

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=16

#SBATCH --cpus-per-task=1

#SBATCH --partition=compute

#SBATCH --mem-per-cpu=4GB

#SBATCH --account=research-<faculty>-<department>

#

JOBNAME=SC8R_S1_DMB_R1

module load abaqus

cd $SLURM_SUBMIT_DIR

TMP=$SLURM_SUBMIT_DIR/results_$JOBNAME

mkdir -p $TMP

cp $JOBNAME.inp $JOBNAME.py $TMP

cd $TMP

abq2022 job=$JOBNAME input=$JOBNAME.inp cpus=$SLURM_NPROCS mp_mode=mpi interactive

abq2022 cae noGUI=$JOBNAME.py
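The staging step in the script above (create a results directory, copy the inputs, run from there) can be sketched as a small shell function that stops early when an input file is missing. This is only an illustrative sketch: the function name stage_inputs is hypothetical, and it assumes the "$JOBNAME.inp" and "$JOBNAME.py" naming used in the script.

```shell
#!/bin/bash
# Hypothetical helper (not an Abaqus or Slurm command): copy the input deck and
# the post-processing script of a job into a per-job results directory.
# Usage: stage_inputs <jobname> <submit_dir>
stage_inputs() {
    local jobname="$1"
    local submit_dir="$2"
    local results_dir="$submit_dir/results_$jobname"
    local f

    # Refuse to continue if an input is missing, so the job fails early.
    for f in "$submit_dir/$jobname.inp" "$submit_dir/$jobname.py"; do
        if [ ! -f "$f" ]; then
            echo "missing input file: $f" >&2
            return 1
        fi
    done

    mkdir -p "$results_dir" || return 1
    cp "$submit_dir/$jobname.inp" "$submit_dir/$jobname.py" "$results_dir/" || return 1

    # Print the directory so the caller can cd into it.
    echo "$results_dir"
}
```

In a job script this could be used as: cd "$(stage_inputs "$JOBNAME" "$SLURM_SUBMIT_DIR")" || exit 1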

Multithreading job

1. Load module

Load the Abaqus module with "module load abaqus" (as in the script below). This makes the Abaqus commands, such as abq2022, available in your shell.

2. Submit Abaqus job

If you want to run Abaqus with shared memory on a single node, you can submit the following script:

#!/bin/sh

#SBATCH --job-name="abaqus"

#SBATCH --partition=compute

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=1

#SBATCH --cpus-per-task=4

#SBATCH --time=08:00:00

#SBATCH --mem-per-cpu=2G

#SBATCH --account=research-<faculty>-<department>

module load abaqus

abq2022 cpus=$SLURM_CPUS_PER_TASK mp_mode=threads [all your other abaqus parameters here...]
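The two scripts request their cores differently: the MPI job asks for them as tasks (--ntasks-per-node), while the multithreading job asks for them as CPUs of a single task (--cpus-per-task). A minimal sketch of picking the matching value for "cpus=", assuming the standard Slurm variables SLURM_NTASKS and SLURM_CPUS_PER_TASK are set in the job environment; the function name abaqus_cpus is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: print the value to pass to Abaqus as cpus=...
#   mode "mpi"     -> number of Slurm tasks (SLURM_NTASKS)
#   mode "threads" -> cores of the single task (SLURM_CPUS_PER_TASK)
# Falls back to 1 when the variable is unset (e.g. outside a Slurm job).
abaqus_cpus() {
    case "$1" in
        mpi)     echo "${SLURM_NTASKS:-1}" ;;
        threads) echo "${SLURM_CPUS_PER_TASK:-1}" ;;
        *)       echo "unknown mp_mode: $1" >&2; return 1 ;;
    esac
}
```

For example, a threaded run could then be started with: abq2022 cpus="$(abaqus_cpus threads)" mp_mode=threads [other parameters]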

Running Abaqus interactively with the GUI on a visual node

1. Open remote connection to the visual node

A detailed discussion of the visualization nodes can be found here.

a. Connect to DelftBlue and start a "desktop" job:

$ module load 2023r1

$ module load desktop

$ vnc_desktop 2h -- --cpus-per-task=8 --mem=16G --account=research-<faculty>-<department>

As a result, you should get a message along these lines:

Reserving a GPU node (partition "visual")

SLURM job ID is 912918

Waiting for job to start running on a node

We got assigned node visual01, waiting for output...

Waiting until VNC server is ready for connection...

VNC server visual01:1 ready for use!

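Note which node and display the system allocated to you: here "visual01:1", i.e. display 1 on node visual01. By VNC convention, display N listens on TCP port 5900 + N, so display 1 corresponds to port 5901.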
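VNC conventionally maps display number N to TCP port 5900 + N, so the "visual01:1" in the message above corresponds to port 5901 on node visual01. A minimal sketch that pulls the node and display out of the "ready for use" line and computes that port; the function name vnc_endpoint is hypothetical, and the message format is assumed to match the example output above:

```shell
#!/bin/bash
# Hypothetical helper: read a line such as
#   "VNC server visual01:1 ready for use!"
# and print "<node> <tcp-port>", using the VNC convention port = 5900 + display.
vnc_endpoint() {
    local line="$1"
    local target node display

    # Pick the first word that looks like <node>:<display-number>.
    target=$(printf '%s\n' "$line" | grep -oE '[A-Za-z0-9._-]+:[0-9]+' | head -n 1)
    if [ -z "$target" ]; then
        echo "no <node>:<display> found in: $line" >&2
        return 1
    fi

    node=${target%%:*}      # text before the colon
    display=${target##*:}   # digits after the colon
    echo "$node $((5900 + display))"
}
```

The printed node and port are the two values needed for the tunnel in the next step.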

b. Open a tunnel to the port above

Open a separate terminal window and open a tunnel to the corresponding port:

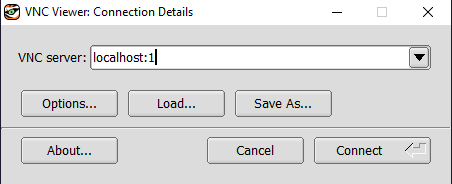
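A tunnel like the one in step b is typically opened with SSH local port forwarding (ssh -L). The sketch below only prints the command, so it can be checked before it is run. The function name tunnel_command is hypothetical, the login host is left as a parameter because it is not given on this page, and using the same port number on both ends is an assumption made for simplicity:

```shell
#!/bin/bash
# Hypothetical helper: print (not run) an SSH local-forwarding command.
# "ssh -L <local-port>:<node>:<remote-port> <user>@<login-host>" forwards a
# port on your own machine through the login host to the VNC server on the
# visual node.
# Usage: tunnel_command <user> <login-host> <node> <port>
tunnel_command() {
    if [ "$#" -ne 4 ]; then
        echo "usage: tunnel_command <user> <login-host> <node> <port>" >&2
        return 1
    fi
    local user="$1"
    local login_host="$2"
    local node="$3"
    local port="$4"

    # Same port number locally and remotely (assumption, see above).
    echo "ssh -L ${port}:${node}:${port} ${user}@${login_host}"
}
```

With such a tunnel open, a VNC client pointed at localhost and the chosen local port reaches the remote desktop.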

c. Connect your VNC client to the local port corresponding to the open tunnel

or graphically:

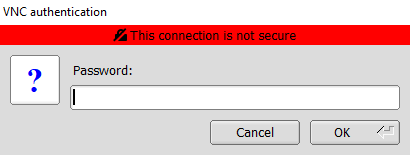

You should now be prompted for your VNC password (not your NetID password!):

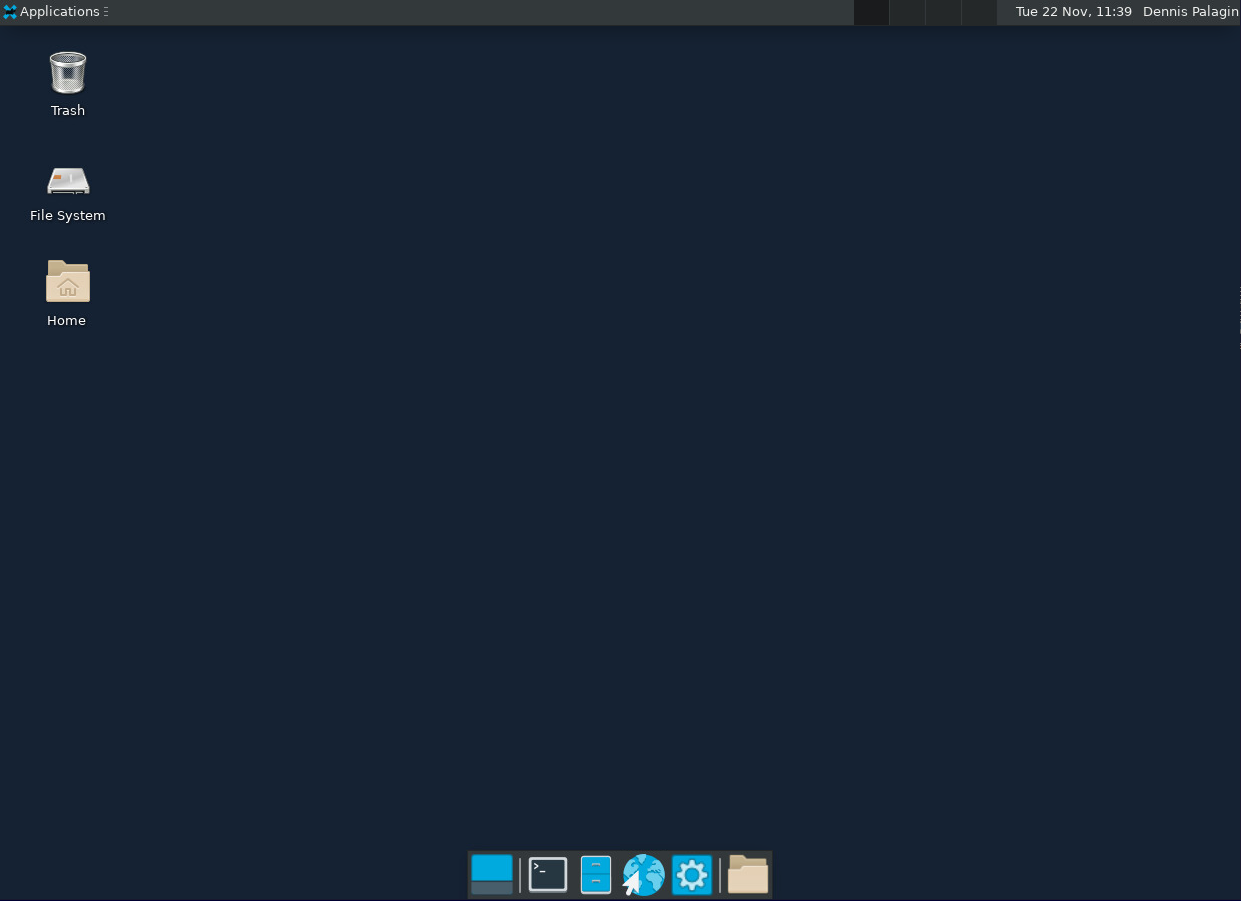

Finally, we have our remote desktop up and running:

2. Load necessary modules and run Abaqus GUI

Inside the remote desktop, open a terminal and type the following:

module load 2023r1

module load visual

module load qt

module load abaqus

module load virtualgl

vglrun abq cae

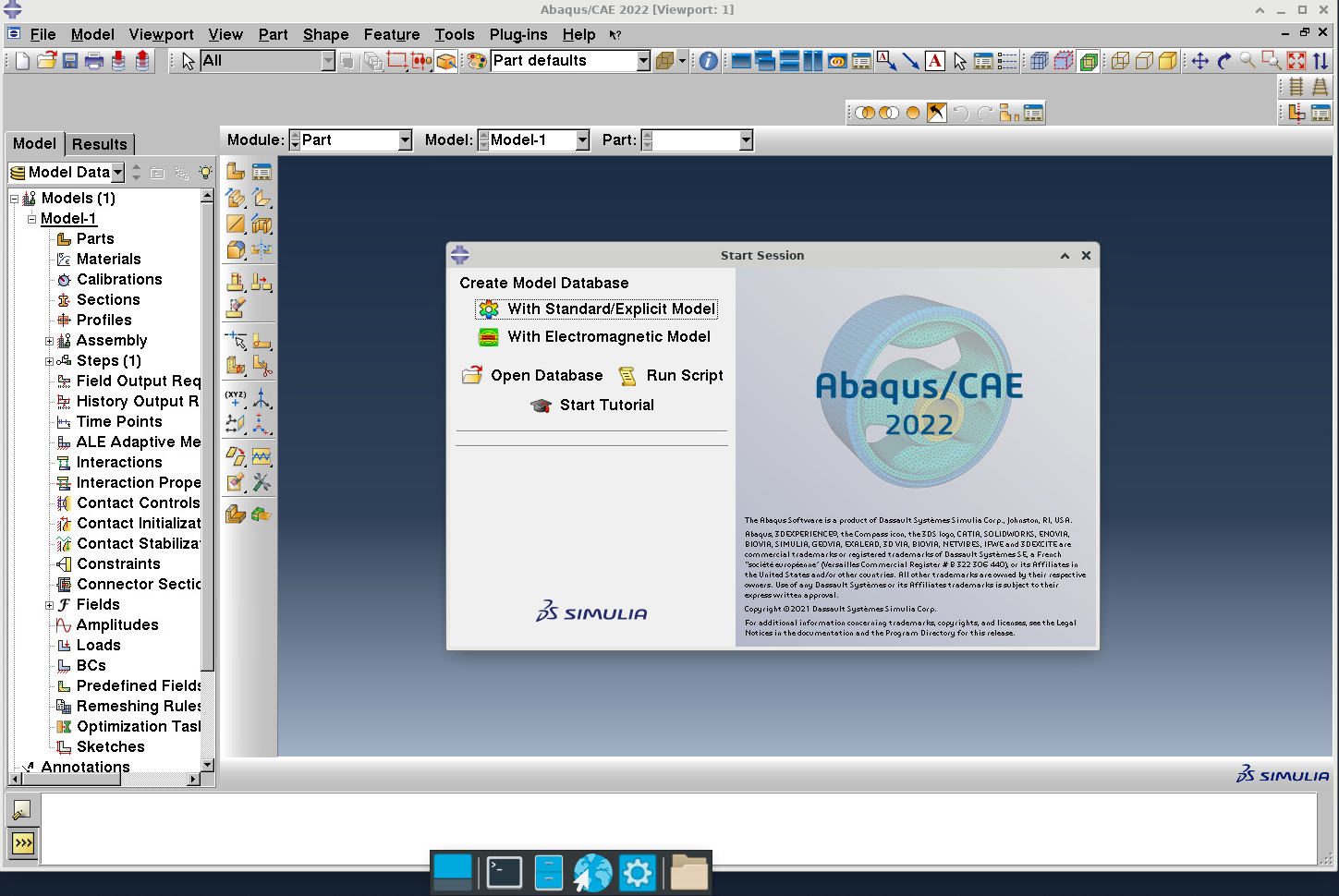

You should see the following screen:

You are good to go now.