FAQ

I am unhappy with my disk quota, job time limitations, the number of GPUs I can use, etc.

We are implementing these policies not to annoy users, but to be (and remain) useful for as many users as possible. The policies are not set in stone, though, and your feedback is very welcome so that we can keep improving the system. For more information on why we made these policies, see here.

How exactly is the priority for my job calculated?

This question is hard to answer. Roughly, the priority is calculated based on your previous usage, the overall usage of the account (i.e., of all users in the research/education/project account you use), and the waiting time of the job so far. **However**, priority is not the only aspect determining when your job will run: for example, short-running jobs that can be spread over cores on multiple nodes may get ahead through so-called "backfill scheduling" without pushing back other jobs.

The configuration of the Slurm scheduler on DelftBlue is maintained by our experienced HPC admin team. It is completely transparent: if you want to know the details, you can use the command ``scontrol show config`` on DelftBlue to get the full list of settings. At the time of writing, for example, there are 17 parameters containing Priority, which illustrates why we do not give you an "exact" answer. Information on what these parameters mean can be found in the Slurm scheduling configuration guide.

Is DelftBlue the right machine for me?

It depends on what exactly you are trying to do. DelftBlue is a supercomputer, intended to allow scaling up applications. It may require a significant effort to get started if you are not used to working on HPC systems. TU Delft also provides a range of different services, including personal workstations, physical or virtual machine servers, cloud services, faculty-based local servers, and many others. If you are looking for an IT solution but are not sure what the best option is for you, talk to your faculty's IT manager.

I am new to Linux. Help!

The basics of working with Linux and remote systems can be self-learned relatively easily with online materials. Start by checking out the relevant Software Carpentry courses:

- Linux command-line basics,

- Linux command line (more advanced material),

- Introduction to High-Performance Computing.

Where can I find courses on using and programming HPC systems like DelftBlue?

- DCSE courses are generally run on DelftBlue

- SURF courses, in particular the "Introduction to Supercomputing" series, are geared toward the national computing infrastructure, which is reasonably similar to DelftBlue.

How do I get access?

By default, every employee with a <netid> is able to log in to DelftBlue and run jobs as a guest. However, your jobs will run with relatively low priority, and if the machine is busy, it may take a long time until they get scheduled. To be able to run jobs with high priority, you can request access to your faculty's share. To get full access, please use the form "Request Access" in the self-service portal.

Where do I get help?

For technical issues, please use the DelftBlue category under "Research support" in the TU Delft Self-Service Portal. For discussions about specific software, compilers, etc., as well as for exchanging knowledge with other users, please use the DHPC Mattermost forum.

I can not log in

- Are you a student? If yes, you have to explicitly request access to local servers and clusters. For example, the INSY department is operating their own machine, known as DAIC. Make sure you are trying to connect to the right system!

- Check the correct address of DelftBlue (``login.delftblue.tudelft.nl``), the correct port (22), and the correct username (your <netid>). If you are using the SSH config file, check that it has been set up correctly.

- When you cannot make a connection (no prompt for username or password at all), check the Remote access to DelftBlue page for the ways to access DelftBlue from the outside world. If you are outside of campus, use EduVPN!

- When you receive an authentication failure after entering your password, check that you are using the correct <netid> and password. For example, try to log in to the TU Delft Webmail.

- Finally, if all the above points are resolved, check the status of DelftBlue on the web page of the Delft High Performance Computing Center. Make sure the system is up, and there are no interruptions or planned maintenance.

I can log in, but I don't have a /home folder

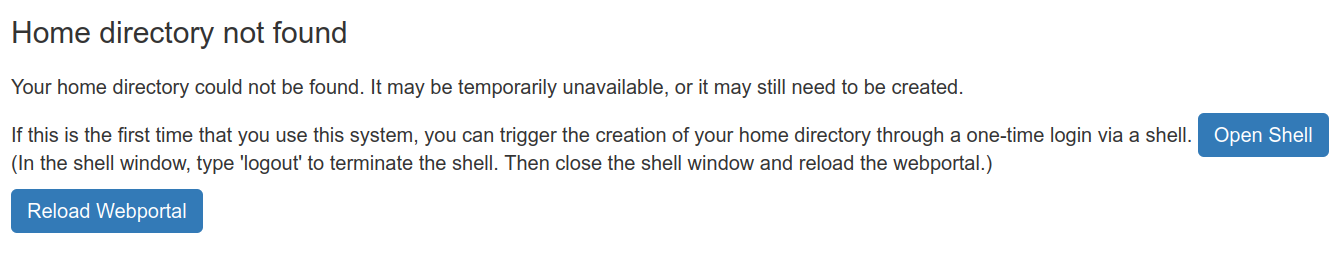

/home and /scratch folders for new users automatically. If your first ever login to DelftBlue is via OpenOnDemand, you should get the following warning: What you should do then is to click Clusters and then DelftBlue Cluster Shell Access to trigger the "normal" SSH connection, logout of this shell by typing logout, and finally click "Reload Webportal". Now you should be able to access your /home and /scratch directories via OpenOnDemand.

What you should do then is to click Clusters and then DelftBlue Cluster Shell Access to trigger the "normal" SSH connection, logout of this shell by typing logout, and finally click "Reload Webportal". Now you should be able to access your /home and /scratch directories via OpenOnDemand.
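After reloading the web portal, you can confirm from any DelftBlue shell that both directories now exist. The snippet below is a minimal sketch: ``check_dirs`` is a hypothetical helper written for this illustration, and the scratch location ``/scratch/<netid>`` is assumed from the job script example elsewhere in this FAQ.

```shell
# check_dirs is a hypothetical helper (not a DelftBlue command).
# It prints the status of each directory and returns 1 if any is missing.
check_dirs() {
    missing=0
    for dir in "$@"; do
        if [ -d "$dir" ]; then
            echo "ok:      $dir"
        else
            echo "missing: $dir"
            missing=1
        fi
    done
    return "$missing"
}

# Assumption: scratch space lives under /scratch/<netid>.
netid="${USER:-$(id -un)}"
check_dirs "$HOME" "/scratch/${netid}" \
    || echo "Open Cluster Shell Access once, log out, and reload the web portal."
```

If either directory is reported as missing, repeat the shell access step described above before contacting support.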

I can log in, but I cannot submit a job

You should be able to submit jobs to the queue using the ``sbatch`` command (see here). If there are any specific errors in your submission script, those should be displayed either in your terminal window or in the ``slurm-XXX.out`` file. Please note that the OpenOnDemand web interface does not have a working job template at the moment! If you use the web interface, make sure to copy the correct ``#SBATCH`` commands from one of the examples in the documentation!

Why does my job not run?

Every user with a <netid> is able to log in to DelftBlue. However, your jobs will run with rather low priority in the innovation account, and if the machine is busy, you may have to wait for several days. You can request access to your faculty's research share via this form in the Self-service portal.

If you are a registered user and your jobs still do not get scheduled within a day or so, the column REASON in the output of ``squeue --me`` will give you a clue. The Resources you request may not be available at the moment, or they are reserved for users with higher Priority (see FAQ item 2 above). Note that the resources you request are determined by the job script and by the default settings for options you omit, and may not always be obvious to you. For example, if you specify ``--ntasks=1 --cpus-per-task=1 --mem-per-cpu=100GB``, this job would occupy about half a node (24 cores in Phase 1) because of the high memory usage, even though only one core (2 percent of the CPUs) is being requested.

Finally, a personal or group limit may apply. These are implemented to ensure fair scheduling of jobs. For details see the Slurm documentation on job reason codes.

Can I get an estimate when my job will run?

If your job is already in the queue and pending, you can use ``squeue --start`` to get a rough estimate. This will only output a predicted starting time if the job is already waiting for resources. You can also get a prediction before submitting the job, for example to evaluate which partition to use or how much memory to ask per CPU to get the job started sooner. The command ``sbatch --test-only`` prints the estimated start time (based on all information available at that moment) without actually submitting the job.

Can I log in to the node(s) on which my job is running?

Sometimes you may want to check how your job is running using commands like ``top``, ``nvidia-smi``, etc. In order to get a console "inside your Slurm job", first find the job's ID using

    squeue --me

and then start an interactive bash session as follows (here for 30 minutes):

    srun --jobid=<ID> --overlap --pty -t 00:30:00 bash

See the SLURM page for details on interactive jobs.

I can not find and/or load software modules

We provide two versions of the software stack; typically you should pick the latest one (2023r1 in this example):

    [<netid>@login03 ~]$ module avail
    ------------------------------------- /apps/noarch/modulefiles -------------------------------------
    2022r2 2023r1

To make the contents of the software stack 2023r1 available, use:

    module load 2023r1

This system is based on lmod. The module organisation is hierarchical. This means that the modules you see depend on the ones you loaded: in particular, if you don't load ``openmpi`` you don't see modules like ``hdf5`` that depend on it. To find modules in the hierarchy, you can use the ``module spider`` command. Check out the DHPC modules page for more info.

After loading certain modules, the nano (editor) command does not work anymore

This is caused by incompatible libraries in the search path. You can avoid it by putting the following line in your ``.bashrc`` file, and running ``exec bash`` to refresh the active session:

    alias nano='LD_PRELOAD=/lib64/libz.so.1:/lib64/libncursesw.so.6:/lib64/libtinfo.so.6 /usr/bin/nano'

I compiled my code, but it fails with Illegal Instruction errors on some nodes

Please be aware that most nodes have Intel CPUs, except for the ``gpu-v100`` nodes, which use AMD CPUs (see the DHPC Hardware page for details). Depending on your application, you might need to compile two different versions of your program to run it on the respective nodes. "Phase-2" nodes have a later generation of Intel CPUs, so code compiled on them may contain instructions that do not run on the older hardware. Please also note that the available software modules for compute and GPU nodes might be different for this reason.

I have difficulties with compiling a specific software package

Many software packages are already available on DelftBlue as software modules. Instructions for certain frequently used packages can be found in the Howto Guides.

Intel compiler takes forever or does not work at all

TU Delft's license for the Intel compiler suite allows 5 users to compile their software simultaneously. If your ``ifort`` compilation takes longer than expected, or does not start at all, try again in a bit. If it still does not work, contact us. Click here for more information.

Slurm fails with out-of-memory (OOM) error

You might encounter the following error when submitting jobs via Slurm:

    slurmstepd: error: Detected 2 oom-kill event(s) in StepId=1170.0. Some of your processes may have been killed by the cgroup out-of-memory handler.

You need to set the ``--mem-per-cpu`` value in the submission script. This value is the amount of memory in MB that Slurm allocates per requested CPU core. It defaults to 1 MB. If your job's memory use exceeds this, the job gets killed with an OOM error message. Set this value to a reasonable amount (i.e. the expected memory use with a little bit added for some head room). Example: add the following line to the submission script:

    #SBATCH --mem-per-cpu=1G

This allocates 1 GB per CPU core. Click here for more information.

My Intel-compiled code runs normally on login node, but is super slow upon submission to the queue

For Intel MPI to operate correctly, it must be configured to work together with Slurm. Otherwise Intel MPI may bind all requested threads to the same CPU. To configure Intel MPI to work with Slurm, set it to use the Slurm PMI interface, and use ``srun`` instead of ``mpirun``. Use the following in your submission script:

    export I_MPI_PMI_LIBRARY=/cm/shared/apps/slurm/current/lib64/libpmi2.so

Then invoke your binary with ``srun``. Typically there is no need to specify arguments, as they are taken from the ``#SBATCH`` commands in the job script by default.

    srun ./my_program

I cannot access my files on the TU Delft home, bulk, umbrella drives

On the login and transfer nodes, these drives are mounted under ``/tudelft.net``. On all other nodes, they are not directly accessible. If you get the error message 'permission denied' when accessing your directories under ``/tudelft.net`` on the login or transfer nodes, this is likely caused by an expired Kerberos ticket. You can refresh your Kerberos ticket by typing ``kinit`` and entering your password when prompted. Details can be found here.

I would like to ensure that I remove my data from the local disk when the job ends

Data in the local /tmp storage on a node is not removed automatically when a job ends. The job needs to explicitly remove any data that it stores there. If it doesn't, other jobs won't be able to use that space and may fail.

When a job times out or is cancelled, the job script stops execution completely, and any cleanup commands at the end of the script won't run. Instead, use the code in the example job script below to automatically clean up on exit or cancellation.

    #!/bin/bash
    # Your sbatch specifications go at the top
    #SBATCH ...

    # Specify and create a unique local temporary folder location
    tmpdir="/tmp/${USER}/${SLURM_JOBID}"
    /usr/bin/mkdir --parents "$tmpdir" && echo "$tmpdir created successfully."

    # Clean up the local folder when the job exits (even when it times out or is cancelled)
    function clean_up {
        /usr/bin/rm --recursive --force "$tmpdir" && echo "Clean up of $tmpdir completed successfully."
        exit
    }
    trap 'clean_up' EXIT

    # Your own script goes below
    # You need to explicitly specify and use this "$tmpdir" location in your code!
    # (Below are some example lines, uncomment and modify if you want to use them.)
    #/usr/bin/cp "/scratch/${USER}/some_file" "${tmpdir}/some_file"
    #srun <your command> ... <option to specify temporary folder> "$tmpdir" ...
    #/usr/bin/mv "${tmpdir}/result_file" "${HOME}/result_file_run123"
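To try the EXIT-trap pattern without submitting a job, the following minimal sketch runs a "job" in a subshell that fails halfway and then checks that the temporary folder is gone. The helper ``run_with_cleanup`` and the folder name ``job_12345`` are made up for this illustration. The sketch only exercises a failing exit; it does not simulate a Slurm timeout or cancellation.

```shell
# run_with_cleanup is a hypothetical helper for this demo only.
# It runs a fake job in a subshell whose EXIT trap removes the work folder.
run_with_cleanup() {
    workdir=$1
    (
        # The trap belongs to the subshell, so it fires when the subshell exits.
        trap 'rm -rf "$workdir"' EXIT
        mkdir -p "$workdir"
        echo "data" > "${workdir}/some_file"
        # Simulate a job step that fails before any cleanup line is reached.
        exit 1
    )
}

base="$(mktemp -d)"
demo_dir="${base}/job_12345"

status=0
run_with_cleanup "$demo_dir" || status=$?
echo "fake job exit status: $status"

if [ -d "$demo_dir" ]; then
    echo "cleanup failed: $demo_dir still exists"
else
    echo "cleanup ok: $demo_dir was removed"
fi

# Remove the parent folder created for the demo.
rmdir "$base"
```

The exit status of the fake job is preserved, because the trap action itself does not call ``exit`` with a different value.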