Transfer Data

DelftBlue has limited disk space, both in your /home directory and on the scratch disk (/scratch).

In order to move large files to and from the machine, you can use the Linux tools described below on the login nodes, which have access to the TU Delft central storage.

Command line tools

Note for Windows users

Windows 10 or higher supports the ssh and scp commands in the Command Prompt out-of-the-box. You do not necessarily need to install any third-party software.

scp

The simplest way to transfer your files to and from DelftBlue is to use the scp command, which has the following basic syntax:

scp [options] source destination

For example, to transfer a file from your computer to DelftBlue:

user@laptop:~$ scp -p mylocalfile <netid>@login.delftblue.tudelft.nl:~/destination_folder_on_DelftBlue/

To transfer a folder (recursively) from your computer to DelftBlue:

user@laptop:~$ scp -pr mylocalfolder <netid>@login.delftblue.tudelft.nl:~/destination_folder_on_DelftBlue/

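To transfer a file from DelftBlue to your computer:

user@laptop:~$ scp -p <netid>@login.delftblue.tudelft.nl:~/origin_folder_on_DelftBlue/remotefile ./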

To transfer a folder from DelftBlue to your computer:

user@laptop:~$ scp -pr <netid>@login.delftblue.tudelft.nl:~/origin_folder_on_DelftBlue/remotefolder ./

The above commands work either from the university network or when connected via eduVPN with "Institute Access". To "jump" via a TU Delft Linux bastion server instead, replace scp with scp -J <netid>@linux-bastion.tudelft.nl in the above commands and keep the rest of the command unchanged.

Note for students

Students need to use the student-linux.tudelft.nl bastion server instead of linux-bastion.tudelft.nl!
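As a minimal sketch of the jump-host variant, the hypothetical shell function below wraps the folder upload example from above. NETID and BASTION are assumed variable names (not part of any DelftBlue tooling); BASTION defaults to linux-bastion.tudelft.nl, and students would set it to student-linux.tudelft.nl.

```shell
# Hypothetical helper: upload a file or folder to DelftBlue through a TU Delft bastion.
# NETID and BASTION are assumptions; override them before calling the function.
NETID="${NETID:-<netid>}"                       # your NetID
BASTION="${BASTION:-linux-bastion.tudelft.nl}"  # students: student-linux.tudelft.nl

to_delftblue_via_bastion() {
    # $1: local file or folder, $2: destination folder relative to your DelftBlue home
    # -J jumps through the bastion; -p preserves timestamps and modes; -r copies folders recursively
    scp -J "${NETID}@${BASTION}" -pr "$1" "${NETID}@login.delftblue.tudelft.nl:~/$2"
}
```

For example, after setting NETID=jsmith, running to_delftblue_via_bastion mylocalfolder destination_folder_on_DelftBlue/ performs the same upload as the second scp example, but via the bastion.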

rsync

A more powerful alternative to scp is the rsync command. rsync does not just blindly copy files; instead, it synchronizes the destination with the source, copying only the files and folders that were modified and, with the right options, preserving attributes such as file modes and timestamps.

The basic syntax is similar to that of scp, with additional optional arguments:

rsync [options] source destination

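In a typical command such as rsync -av source destination, the -av flags instruct rsync to be verbose (-v), i.e. to show explicitly what it is doing, and to use "archive" mode (-a), i.e. to copy whole folders recursively while preserving file attributes such as permissions, timestamps, and symbolic links. Files that are already up to date at the destination are not copied again.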
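The incremental behaviour can be tried out locally before syncing with DelftBlue. The sketch below uses two temporary folders as stand-ins for the source and the destination, and rsync's --out-format='%n' option to print the name of each transferred item; after one file is modified, the second run should transfer only that file.

```shell
# Local demonstration of incremental syncing with rsync -a (no DelftBlue access needed).
src="$(mktemp -d)"   # stands in for your local folder
dst="$(mktemp -d)"   # stands in for the destination folder on DelftBlue

echo "first"  > "$src/a.txt"
echo "second" > "$src/b.txt"

# First run: everything is new, so both files are copied.
first_run="$(rsync -a --out-format='%n' "$src/" "$dst/")"

# Change one file (to a different size, so rsync's size/timestamp check notices it).
echo "second, modified" > "$src/b.txt"

# Second run: only the modified file is transferred.
second_run="$(rsync -a --out-format='%n' "$src/" "$dst/")"
printf 'First run:\n%s\nSecond run:\n%s\n' "$first_run" "$second_run"

rm -rf "$src" "$dst"   # clean up the temporary folders
```

The trailing slash on "$src/" copies the contents of the folder rather than the folder itself, which is also how you would usually sync into an existing destination folder on DelftBlue.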

Note

Some file and folder permissions might not be compatible with project drives (e.g. on staff-umbrella), because these drives are based on Windows file system access control. If you get an "rsync: failed to set permissions" error, e.g. when rsync'ing to your project drive, please add the --no-perms flag to your rsync command.
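A minimal sketch of such a call, wrapped in a hypothetical helper function. The project folder (PROJECT_DIR, defaulting to /tudelft.net/staff-umbrella/myproject) is an assumption; replace myproject with your own project folder. Since /tudelft.net is only mounted on the login nodes, run this there.

```shell
# Hypothetical helper: copy a folder to your project drive on staff-umbrella.
# PROJECT_DIR is an assumption; point it at your own project folder.
PROJECT_DIR="${PROJECT_DIR:-/tudelft.net/staff-umbrella/myproject}"

to_project_drive() {
    # $1: source folder (a trailing "/" copies its contents), $2: subfolder on the project drive
    # --no-perms skips setting permissions, which the Windows-based project drive may reject
    rsync -av --no-perms "$1" "${PROJECT_DIR}/$2"
}
```

For example, to_project_drive /scratch/$USER/results/ results/ copies the contents of a results folder on the scratch disk into the results folder of the project drive ($USER holds your NetID on DelftBlue).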

sshfs

It is also possible to "mount" DelftBlue folders on your own Linux computer. For this, do the following (assuming you are either on the university network or connected via eduVPN):

-

Create a local folder, which will work as DelftBlue mounting point:

-

Mount the remote folder:

-

To unmount:

-

It is also possible to do this from outside the university network (without eduVPN). For this, you need to set up local port forwarding via linux-bastion, and then run sshfs through this port, for example:

-

Open one terminal window and set local port forwarding:

-

Keep the first terminal window open, and launch another terminal. In the second terminal, mount your folder:

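The steps above can be sketched as the shell functions below. This is a sketch under stated assumptions: NETID and the mount point MNT (default ~/delftblue) are hypothetical names, 2222 is an arbitrary free local port, and fusermount -u is the usual FUSE unmount command on Linux (on systems with only FUSE 3 installed it may be called fusermount3).

```shell
# Hypothetical settings: replace with your own NetID and preferred mount point.
NETID="${NETID:-<netid>}"
MNT="${MNT:-$HOME/delftblue}"

# Step 1: create the local folder that serves as the mount point.
make_mountpoint() { mkdir -p "$MNT"; }

# Step 2: mount your DelftBlue home folder (on campus or via eduVPN).
mount_delftblue() { sshfs "${NETID}@login.delftblue.tudelft.nl:/home/${NETID}" "$MNT"; }

# Step 3: unmount when you are done.
unmount_delftblue() { fusermount -u "$MNT"; }

# Off campus, terminal 1: forward local port 2222 to DelftBlue's SSH port via
# the bastion (students: student-linux.tudelft.nl). Keep this session open.
forward_port() { ssh -L "2222:login.delftblue.tudelft.nl:22" "${NETID}@linux-bastion.tudelft.nl"; }

# Off campus, terminal 2: mount through the forwarded port.
mount_via_forward() { sshfs -p 2222 "${NETID}@localhost:/home/${NETID}" "$MNT"; }
```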

Graphical tools

Windows users

For easy file transfer, a graphical SFTP client might be a good idea (e.g. WinSCP or FileZilla).

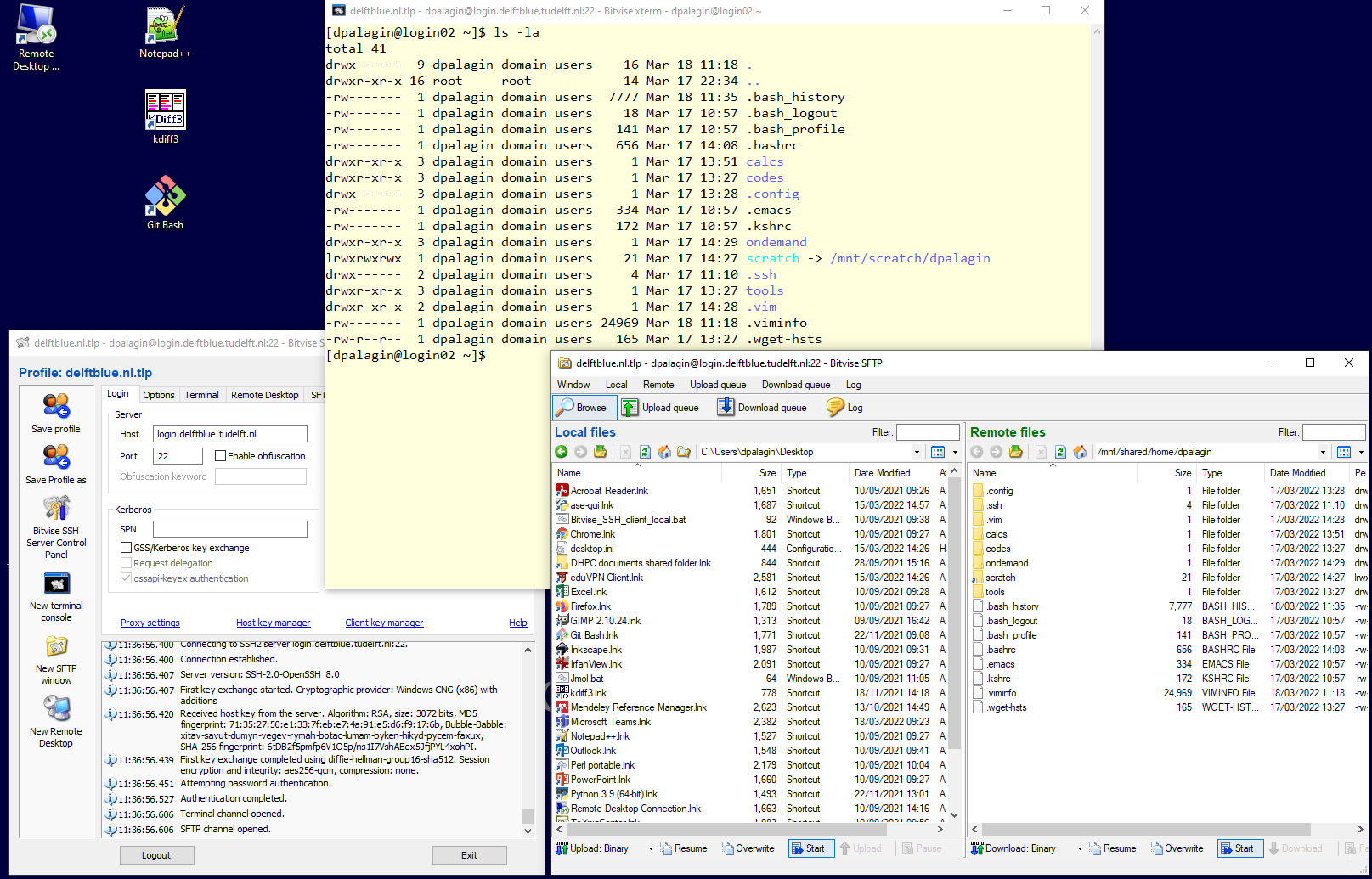

A convenient all-in-one solution is the free Bitvise SSH Client. It combines a terminal and an SFTP file manager:

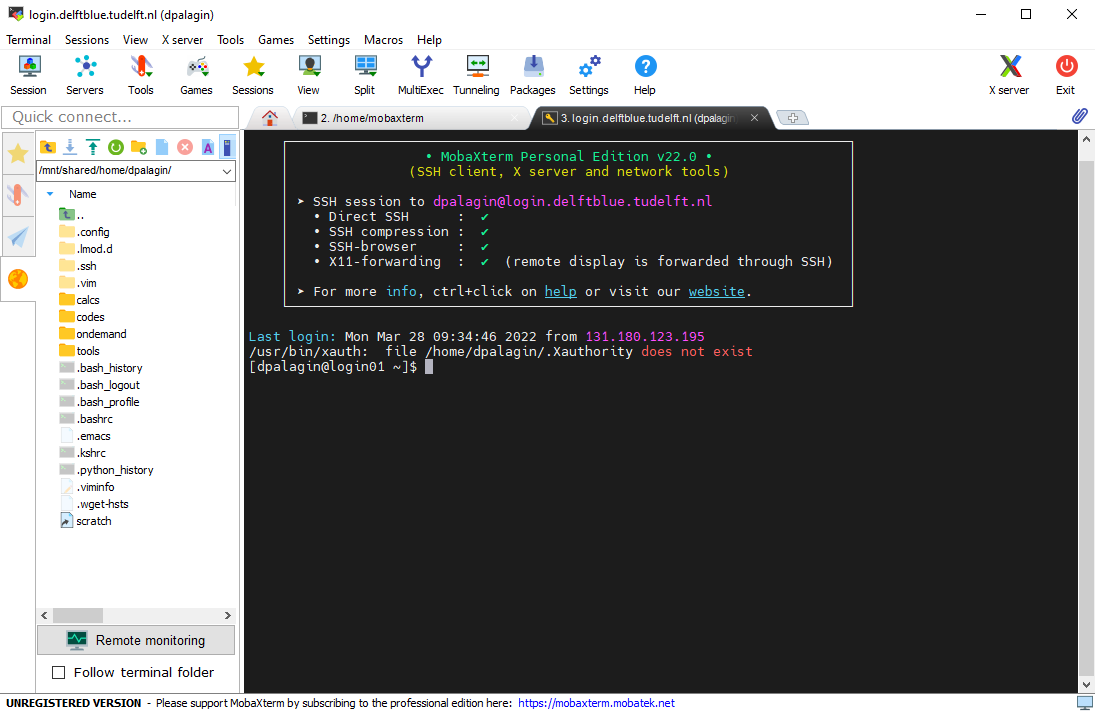

MobaXterm is another (often used) all-in-one solution. The SFTP file manager is shown on the left panel:

Important

Beware that most of the above software needs direct access (either from the university network or via eduVPN).

Linux users

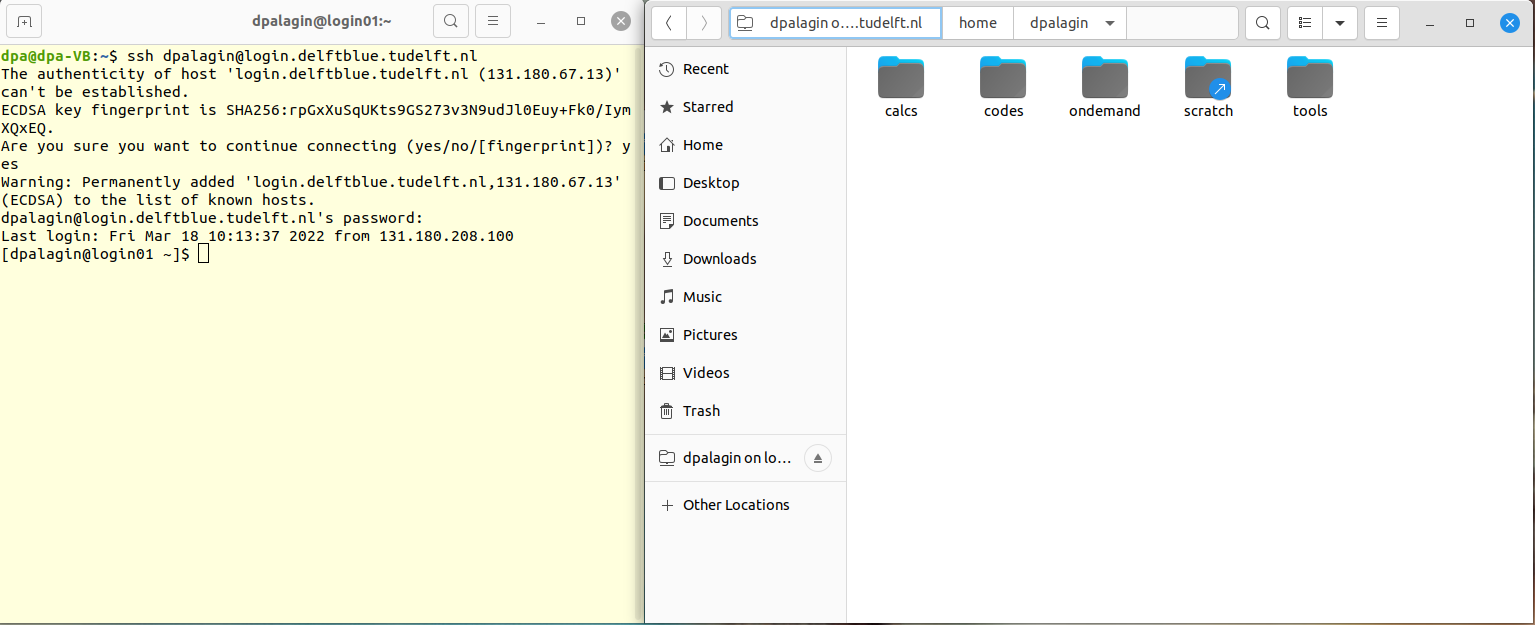

Most default Linux file managers support SFTP out of the box. Just put sftp://<netid>@login.delftblue.tudelft.nl in the address bar:

Important

Beware that most of the above software needs direct access (either from the university network or via eduVPN).

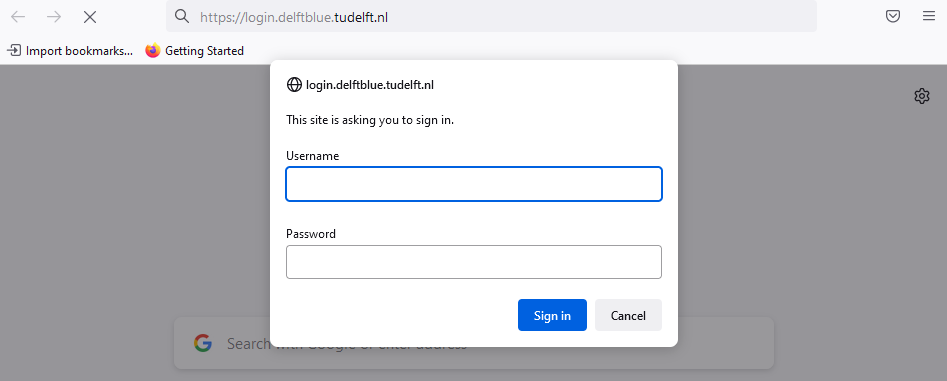

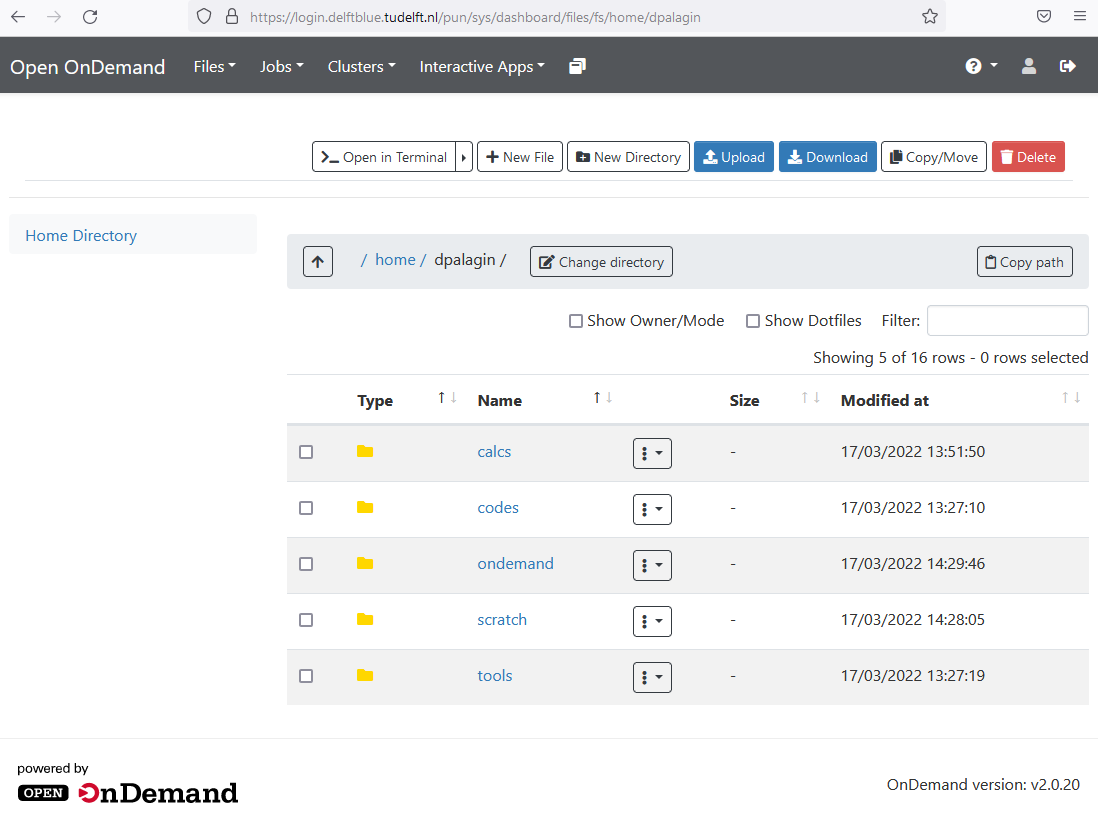

Open OnDemand web-interface

Alternatively, a web interface called "Open OnDemand" is available in your internet browser:

https://login.delftblue.tudelft.nl/

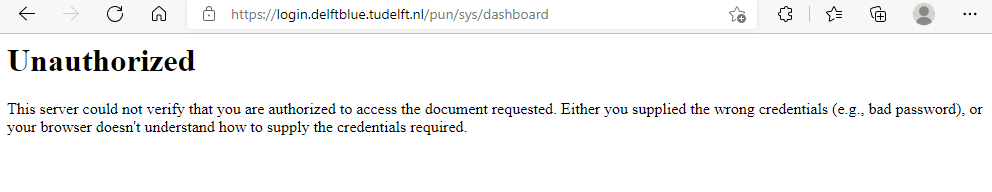

Note: Microsoft Edge might give an "Unauthorized" error:

Mozilla Firefox and Google Chrome seem to work fine:

Home directory as seen via Open OnDemand:

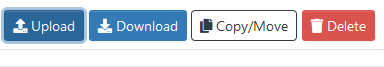

To transfer files to/from DelftBlue, please use the "Upload" and "Download" buttons at the top right corner of the screen:

Folder locations

By default, the following drives/folders are available from DelftBlue:

-

DelftBlue home directory:

/home/<netid>

Warning

The hard space quota on your /home directory is limited to 30.0 GB! After exceeding this amount, you will not be able to write any new files in the home directory, which includes files generated by already running jobs.

-

DelftBlue scratch disk:

/scratch/<netid>

Warning

The /scratch disk will be periodically purged (all data older than a certain age will be deleted automatically)! Make sure to copy your data to your home directory or your project drive as soon as your job has finished!

-

TU Delft network shares:

/tudelft.net

Warning

Only available on the login nodes, not the worker nodes!

    [<netid>@login02 tudelft.net]$ ls -l
    total 104
    drwxr-xr-x 12 root root 4096 Mar 16 15:16 staff-bulk
    drwxr-xr-x 13 root root 4096 Mar 16 15:16 staff-groups
    drwxr-xr-x 28 root root 4096 Mar 17 11:18 staff-homes
    drwxr-xr-x 28 root root 4096 Mar 17 11:18 staff-homes-linux
    drwxr-xr-x 1674 root root 65536 Mar 17 11:18 staff-umbrella
    drwxr-xr-x 12 root root 4096 Mar 17 11:18 student-groups
    drwxr-xr-x 28 root root 4096 Mar 17 11:18 student-homes
    drwxr-xr-x 28 root root 4096 Mar 17 11:18 student-homes-linux

These correspond to most of the available TU Delft data storage solutions.

You can copy files directly to/from your network shares.

Important

Please note: the TU Delft shares use a so-called Kerberos ticket for authentication. When you do not have a valid ticket (i.e. no ticket or an expired ticket), you will receive a "Permission denied" error. A Kerberos ticket is created for you automatically when you log in using your password (but not when you log in using an SSH key!), and when you run the kinit command (and provide your NetID password). A Kerberos ticket has a limited lifetime of 10 hours, after which it expires. You can renew your Kerberos ticket at any time with the same kinit command.
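A minimal sketch for checking the ticket before accessing the network shares, assuming the MIT Kerberos klist command, whose -s option runs silently and exits with a non-zero status when there is no valid ticket. The function name ensure_kerberos_ticket is hypothetical.

```shell
# Hypothetical helper: request a new Kerberos ticket only if there is no valid one.
ensure_kerberos_ticket() {
    if klist -s; then
        return 0   # a valid ticket already exists
    fi
    kinit          # prompts for your NetID password and creates a new ticket
}
```

Running klist without options shows your current ticket, including the time at which it expires.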

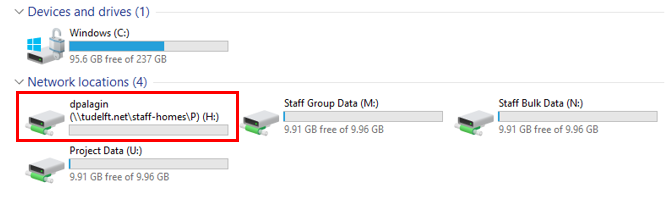

For instance, the following network drives are available:

Windows network drive

In order to access your Windows TU Delft network drive, you have to navigate to:

where "x" is the first letter of your last name, and <netid> is your account name (NetID). For example, if your name is "John Smith" and your NetID is "jsmith", your Windows home directory will be in the following location:

Important

These folders are not available (ls will return an empty list) until you access them, for example by changing your current working directory to the folder:

This corresponds to the H: drive on your TU Delft Windows laptop/desktop:

Linux network drive

Same as above, just navigate to:

Group network drive

To access your group's network drive (data storage for departments), use the following location:

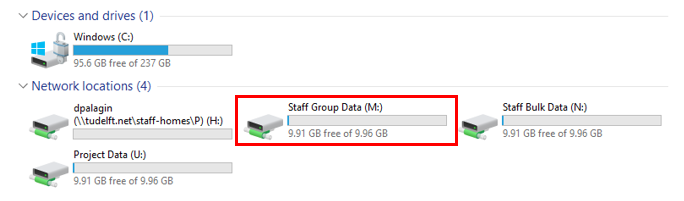

This corresponds to the M: drive on your TU Delft Windows laptop/desktop:

For example, the group NA (Numerical Analysis), which is part of diam (Delft Institute of Applied Mathematics), which is part of ewi (Faculty of Electrical Engineering, Mathematics & Computer Science) has the folder called Shared for members of the group to use:

Bulk data network drive

To access the Bulk data network drive, use the following location:

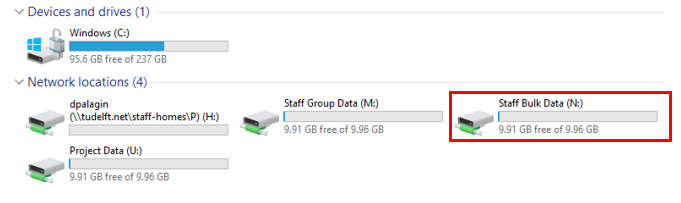

This corresponds to the N: drive on your TU Delft Windows laptop/desktop:

Project data

Finally, the most important network drive is staff-umbrella, which corresponds to "Project data". This drive is designed to store research data. Your allocation on the Project data drive can be requested here.

To access the Project data network drive, use the following location:

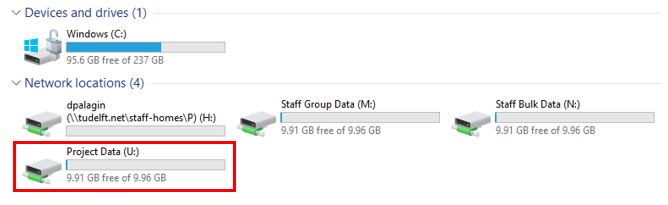

This corresponds to the U: drive on your TU Delft Windows laptop/desktop:

Important

We encourage you to use the Project drive as the main "permanent" storage solution for the data handled on DelftBlue.

Efficient use of the file system

Whether you have large input or output data, or simply need a lot of I/O because you are starting many instances of a serial program, remember that the /home and /scratch drives are a resource shared among all users.

Please check these guidelines for advice on using the file system on DelftBlue diligently.

Quota-related questions and error messages

Sometimes, you may see error messages along the following lines:

Option 1: Your own /home or /scratch is full

Check the message that appears when you log in (you can also display this message again by executing the command exec bash). You should see something like this:

          user/group     ||           size          ||    chunk files
         name     |  id  ||    used    |    hard    ||  used   |  hard
    --------------|------||------------|------------||---------|---------
    NetID         |XXXXXX||    7.50 GiB|    5.00 TiB||   193605|  1000000

Quota information for storage pool home (ID: 2):

          user/group     ||           size          ||    chunk files
         name     |  id  ||    used    |    hard    ||  used   |  hard
    --------------|------||------------|------------||---------|---------
    NetID         |XXXXXX||    4.81 GiB|    8.00 GiB||    67344|  1000000

If any of these limits (size or number of chunk files) is exceeded for either /home or /scratch, you should clean up. A chunk is the smallest unit of storage allocated to a file: any file occupies at least one chunk, i.e. 512 kB.

Option 2: Default /tmp folder is full

If this is the case, create your own tmp in /scratch and export the TMPDIR variable to point to that folder:
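A minimal sketch of this, as a hypothetical function that you could call in your job script or ~/.bashrc. It assumes that $USER holds your NetID (as it does on DelftBlue), so the default folder is /scratch/<netid>/tmp; the folder name tmp itself is an arbitrary choice.

```shell
# Hypothetical helper: point TMPDIR at a private folder on the scratch disk.
use_scratch_tmp() {
    # $1 (optional): folder to use; defaults to /scratch/$USER/tmp
    local dir="${1:-/scratch/${USER}/tmp}"
    mkdir -p "$dir" || return 1
    export TMPDIR="$dir"   # programs that honour TMPDIR (such as mktemp) now use this folder
}
```

After calling use_scratch_tmp, a plain mktemp call creates its temporary file under /scratch/<netid>/tmp instead of /tmp.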

How do I know how much data I have?

There are two important metrics here, the total amount of data (e.g. in gigabytes), and the total number of files.

To check the amount of data, you can use the du command. For example, if I want to see how much data is in each file and sub-folder of the current folder, I use the following command:

    $ du -ah --max-depth=1 | sort -h
    5.5K gnuplot
    7.5K julia
    313K coarrays
    455K matlab
    637K firedrake
    699K jupyterlab
    6.8M oxDNA_2.4_RJUNE2019.tgz
    44M oxDNA
    53M tensorflow
    267M singularity
    558M ansys
    761M nvfortran
    951M garnet
    1.2G apptainer_examples
    3.1G turbostream
    4.3G cplex
    13G fluent
    25G .

To check the number of files in all of these folders, I can use, for example, the following command:
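One possible way, shown here as a sketch with a hypothetical function name, is to count the regular files under each sub-folder with find and wc, and to sort the result so that the folders with the most files end up at the bottom, as in the du output above. Recent versions of GNU du also offer an --inodes option, which counts inodes (files and folders) instead of disk usage.

```shell
# Hypothetical helper: count regular files in each sub-folder of the current folder.
count_files_per_folder() {
    local d
    for d in */ .[!.]*/; do
        [ -d "$d" ] || continue                      # skip glob patterns that matched nothing
        printf '%s\t%s\n' "$(find "$d" -type f | wc -l)" "$d"
    done | sort -n                                   # folders with the most files last
}
```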